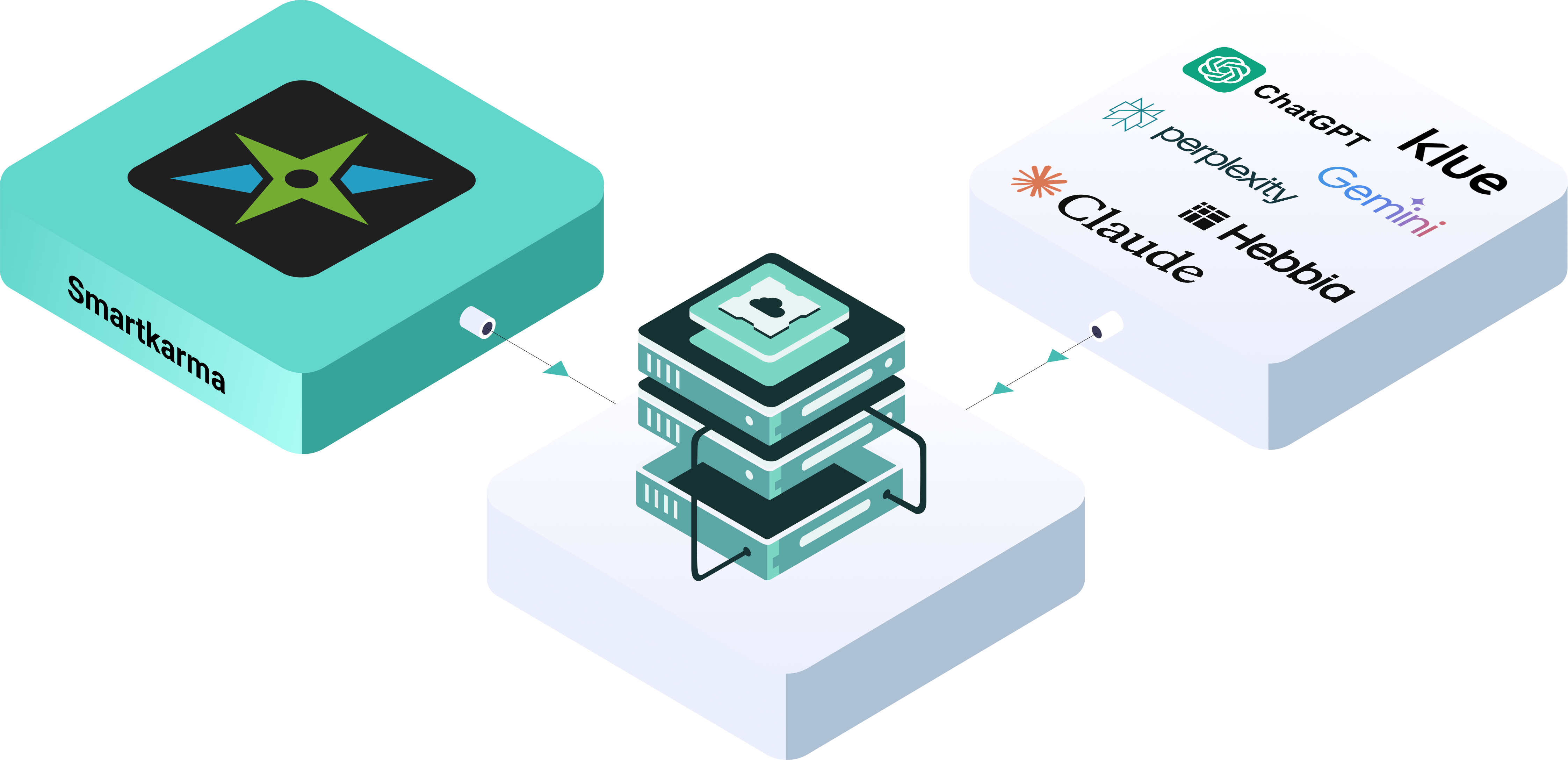

"AI scrapes the web. Smartkarma taps the source."

Smartkarma vs AI Tools:

ChatGPT, Gemini, Perplexity, Claude, Hebbia, and Klue

Real-Time, Primary Insight, Data and Access from Trusted Sources. Not Just AI Outputs

Summary

Smartkarma brings together a vetted community of analysts and specialist data providers to deliver real-time, independent insight, specialist alternative data, and analyst access that go well beyond what large language models can offer. Smartkarma also embeds AI tools across its platform to boost your productivity.

Key Takeaways

Details

AI platforms such as ChatGPT, Gemini, Perplexity, Claude, Hebbia, and Klue have made it easier to access and summarise large volumes of information. However, these tools are not investment research platforms with proprietary inputs. Their outputs depend on publicly available data and language model training, without domain vetting, institutional accountability, or live market insight.

Smartkarma provides investors with access to a network of experienced, independent analysts who produce real-time research on differentiated, dollar-dense topics. Unlike AI platforms, Smartkarma’s content is authored by identifiable experts with track records, offering depth, context, and accountability that automated tools cannot replicate.

Beyond content, Smartkarma enables direct engagement between investors, analysts, and corporate IR teams through discussions and private chat, fostering two-way interaction that static AI outputs do not offer. The platform also integrates specialist alternative datasets, particularly across APAC markets, to provide a richer, more actionable research experience.

Industry Findings

“Off-the-shelf LLMs experience serious hallucination behaviors in financial tasks.”

A study titled “Deficiency of Large Language Models in Finance: An Empirical Examination of Hallucination” found that generic LLMs often “hallucinate” (i.e. invent or fabricate data) when handling finance-related tasks. (arXiv)

“LLMs used for investment advice induce increased portfolio risks across multiple risk dimensions.”

Research shows that AI models, when used for investment advice, aggravate risks like sector clustering, trend-chasing, and allocation bias. (PMC)

“LLM financial outputs had multiple arithmetic mistakes and lacked common sense.”

In one analysis, general LLM responses included wildly implausible numbers (e.g. projecting returns of 11,878% for a life insurance policy), illustrating how these systems can stray from basic financial logic. (Financial Planning Association)

“The failure rate for AI projects can run as high as 80%.”

In banking and financial services, many AI initiatives never reach production or fall short of promised outcomes. The high failure rate points to model risk, implementation risk, and overreach. (The Financial Brand)

“AI tools mostly fumble basic financial tasks — all averaged below 50 % accuracy.”

A recent study testing 22 AI models on real-world finance tasks found that even the best model achieved only about 48.3% accuracy; many models scored far lower. (The Washington Post)

“Even the strongest current AI finance models averaged under 50% accuracy in critical tasks — a sobering reminder: confidence and fluency are not substitutes for domain credibility.”